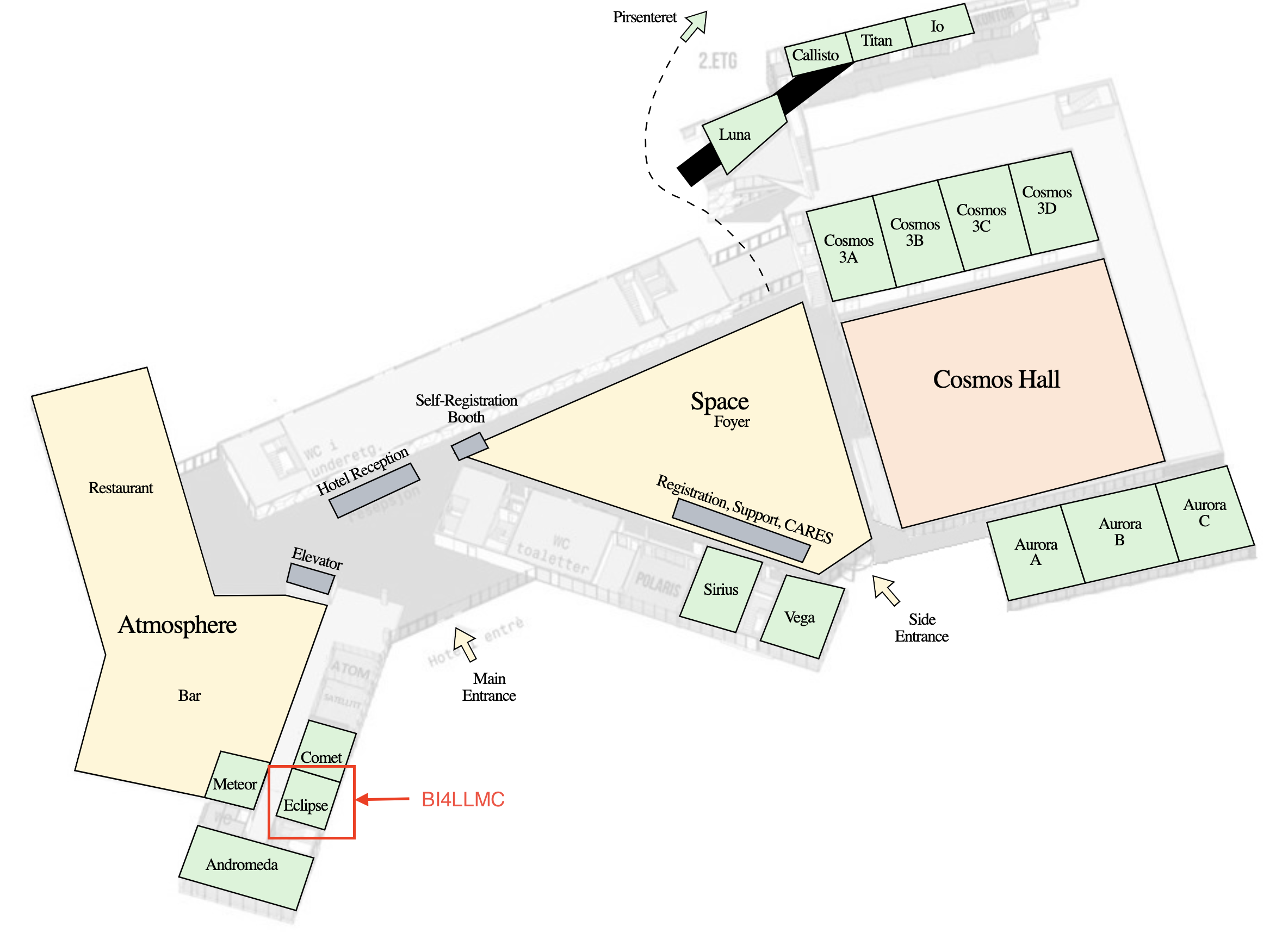

About BI4LLMC

Large Language Models for code (LLMc) have transformed the landscape of software engineering (SE), demonstrating significant efficacy in tasks such as code completion. However, despite their widespread use, LLMc still need to be assessed more thoroughly: current evaluation processes rely heavily on accuracy and robustness metrics, and there is no consensus on which additional factors influence code generation. This gap hinders a holistic understanding of LLMc performance with respect to interpretability, efficiency, bias, fairness, and robustness. Challenges in benchmarking and data maintenance compound the issue, highlighting the need for a comprehensive evaluation approach.

How can we standardize, evolve, and implement benchmarks for evaluating LLMc and multi-agent approaches to code generation?

This is the question we aim to investigate at the BI4LLMC workshop. BI4LLMC 2024, the first edition of the workshop, provides a venue for researchers and practitioners to exchange and discuss emerging views, ideas, the state of the art, work in progress, and scientific results that draw on software engineering and AI to address the problem of data quality in modern systems.

Topics of Interest

- Data and curation processes for benchmarks to evaluate LLMc

- Practices to collect and maintain datasets to evaluate LLMc

- Protocols and evaluation metrics beyond accuracy for LLMc

- Best practices from practitioners and industry for conducting research related to benchmarking LLMc

- Automated tooling and infrastructure for evaluating LLMc

- Benchmarking agent and multi-agent approaches for code generation